NEWS

NIPS-LLD Workshop 2017

The NIPS-LLD 2017 workshop was held in Long Beach, California, the United States of America from December 8th – 9th, 2017 as one of the several workshops of the 31st conference on Neural Information Processing Systems (NIPS). The LLD workshop, short for “Workshop on Learning with Limited Labeled Data: Weak Supervision and Beyond,” focused on methods that highlight machine learning on datasets where ground truth labels for supervision are not available in abundance. The main topics of interest in the LLD workshop has been:

- Learning from noisy labels

- Distant or heuristic supervision, and

- Data augmentation and/or the use of simulated data.

David Inouye, PhD from the University of Texas at Austin was on his internship with Rakuten Institute Of Technology Boston during the summer of 2017, midway between completing his PhD and joining Carnegie Mellon University as a postdoc under Prof. Pradeep Ravikumar from the Fall of 2017. During this time, we started working on data quality issues for our taxonomy classification projects. Soon, we found out that the usual paradigm of classifier tuning using train/dev/test sets has not been working for improving performance on our ground truth test datasets. The problem that we have been facing relates to the presence of noisy labels in the training data. Although, feature engineering improved classification performance in equally noisy development (dev) sets, the effective performance on the ground truth test data i.e. test data with no noise in the labels, has been decreasing.

To this end, David’s research over the summer had been to address the fundamental issue of choosing model parameters for classifiers, so that they perform reasonably well on ground truth test data, although the training data is noisy at best with regards to labels.

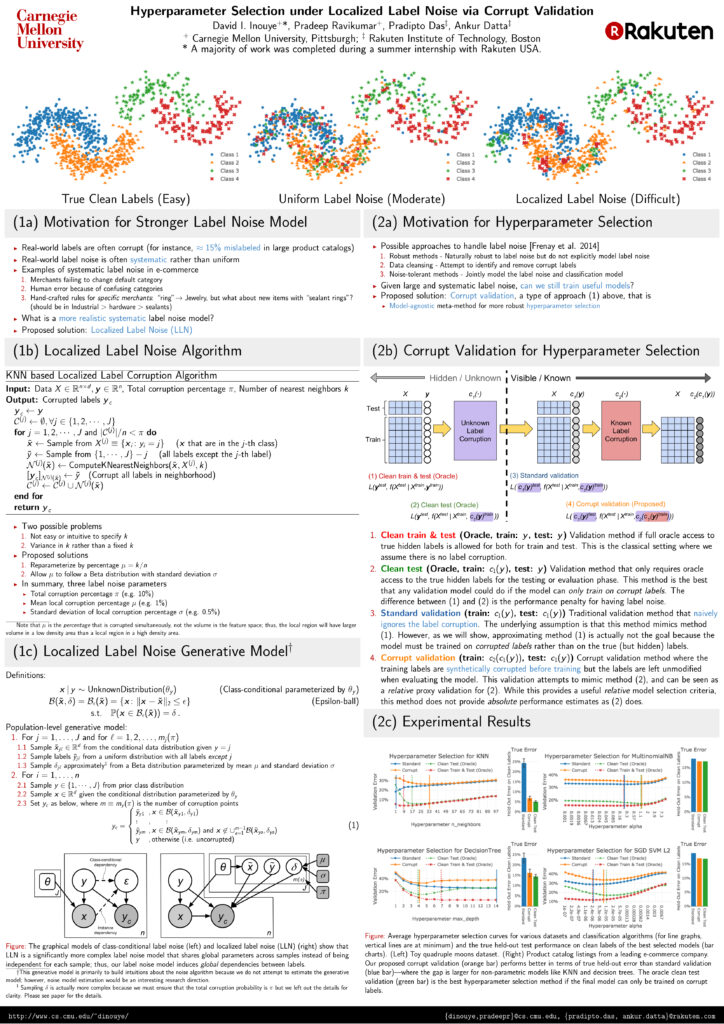

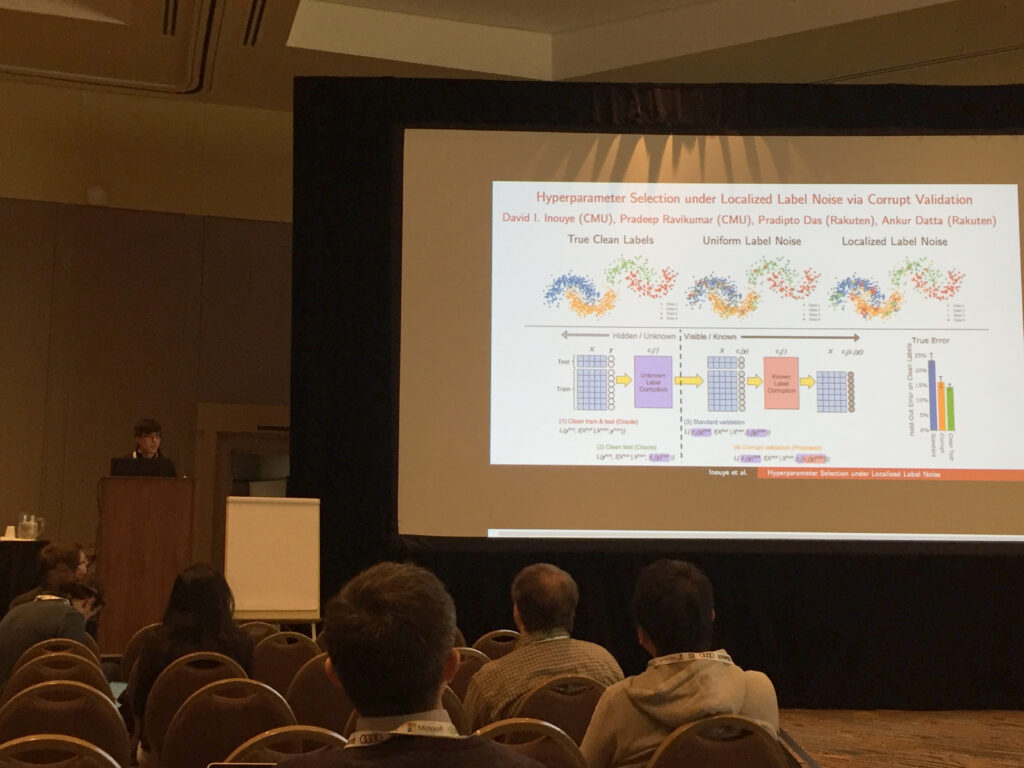

Experiments on synthetic data and a subset of noisy labeled listings from one of our business units eventually resulted in our paper titled, “Hyperparameter Selection under Localized Label Noise via Corrupt Validation.”In this paper, because we do not have access to clean ground-truth classification labels, we develop a method for selecting parameters by synthetically injecting label noise into the training data and show that it performs better than standard approaches in certain settings. To our best knowledge, this synthetic label noise is a novel approach to alleviating the effects of noisy labels in training and opens up new research questions and directions.

Congratulations to David.

https://nips.cc/Conferences/2017

https://lld-workshop.github.io/

https://nips.cc/Conferences/2017/Schedule?showEvent=9478