
Parsing Floor Plan Images

The Internet has become a great resource for browsing real estate information, and many property listings include floor plan images. These images contain structural information, such as room shapes and sizes, as well as semantic information, such as room types and the locations of doors, windows, and kitchen counters.

Researchers at the Rakuten Institute of Technology (RIT) have been working on automatically understanding these images [1]. Their system uses computer vision to detect walls and objects, reads the text labels, and creates a full parametric model of the apartment or house.

Floor plans come in many different artistic styles, which makes it difficult to hard-code rules for understanding them automatically. RIT's approach combines three components:

1. Wall segmentation

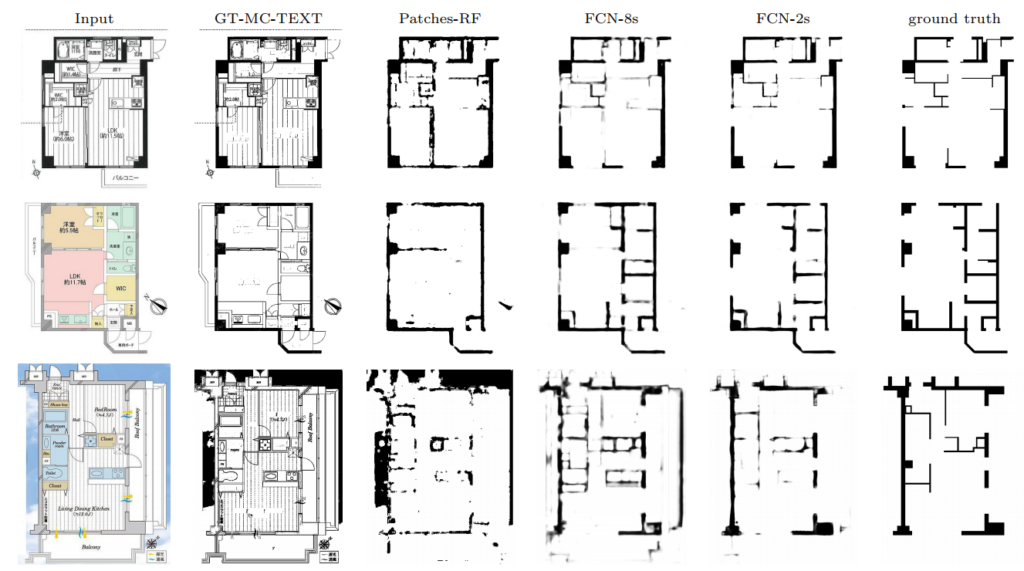

The method takes a learning approach to wall segmentation: it trains a fully convolutional network (FCN) [2]. The training data consist of 500 hand-segmented images, which have been made publicly available for research purposes (the R-FP-500 dataset [3]). The method was compared with a global thresholding method and a patch-based segmentation method. The researchers also experimented with different FCN architectures and found that including layers with smaller pixel-stride values improves the results. Figure 2 shows wall segmentation results on examples from the dataset.

Segmentations produced by FCN-8s [2] are blurrier than those produced by the proposed FCN-2s. The bottom row shows a challenging example on which all methods perform relatively poorly.
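To make this comparison more concrete, here is a minimal NumPy sketch of three ingredients: a global-thresholding baseline, a pixel-wise intersection-over-union (IoU) score for comparing a predicted wall mask against a hand-segmented one, and a toy illustration of why output stride matters. Otsu's method is an assumption here, since the article does not say which global threshold was used. The helper names (`threshold_walls`, `iou`, `stride_roundtrip`) are hypothetical. `stride_roundtrip` is not an FCN. It only keeps one majority label per stride-by-stride block and upsamples it, to show how a coarse output grid can erase thin walls.

```python
import numpy as np


def otsu_threshold(gray: np.ndarray) -> int:
    """Global threshold for an 8-bit image that maximizes between-class variance (Otsu)."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                  # probability of the class "<= t"
    mu = np.cumsum(prob * np.arange(256))    # cumulative mean of the class "<= t"
    mu_total = mu[-1]
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = np.where(denom > 0, (mu_total * omega - mu) ** 2 / denom, 0.0)
    return int(np.argmax(sigma_b))


def threshold_walls(gray: np.ndarray) -> np.ndarray:
    """Baseline: assume walls are drawn darker than everything else."""
    return gray <= otsu_threshold(gray)


def iou(pred: np.ndarray, gt: np.ndarray) -> float:
    """Pixel-wise intersection over union of two boolean wall masks."""
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        return 1.0
    return float(np.logical_and(pred, gt).sum() / union)


def stride_roundtrip(mask: np.ndarray, stride: int) -> np.ndarray:
    """Toy model of a coarse output grid: one majority label per stride-by-stride
    block, upsampled back by nearest-neighbor repetition (not an actual FCN)."""
    h, w = mask.shape
    if h % stride or w % stride:
        raise ValueError("mask dimensions must be divisible by stride")
    blocks = mask.reshape(h // stride, stride, w // stride, stride)
    coarse = blocks.mean(axis=(1, 3)) >= 0.5
    return coarse.repeat(stride, axis=0).repeat(stride, axis=1)


if __name__ == "__main__":
    # Synthetic 64x64 plan: white background, two 2-pixel-wide black walls.
    gray = np.full((64, 64), 255, dtype=np.uint8)
    gray[10:12, :] = 0
    gray[:, 20:22] = 0
    gt = gray == 0
    print("threshold baseline IoU:", iou(threshold_walls(gray), gt))
    for s in (2, 8):
        print(f"stride {s} IoU:", iou(stride_roundtrip(gt, s), gt))
```

On this clean synthetic plan, the threshold baseline and the stride-2 round trip both score an IoU of 1.0. At stride 8, every block is mostly background, so the 2-pixel walls disappear and the IoU drops to 0.0. A trained network with skip connections degrades far more gracefully than this block-majority toy, so the sketch only shows the direction of the effect. Real scanned plans with text, hatching, and furniture symbols are where a single global threshold breaks down.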

2. Object detection

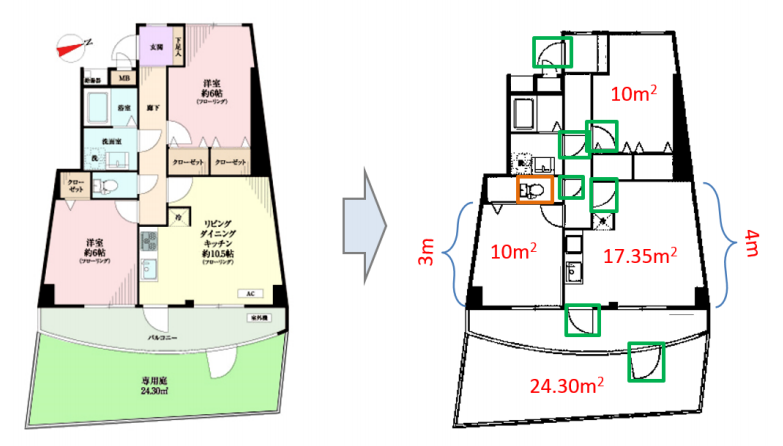

A Faster R-CNN is used for object detection [4]. A subset of the images was annotated, and the network was trained to detect doors, sliding doors, stoves, bathtubs, sinks, and toilets.
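Faster R-CNN produces many overlapping candidate boxes for each object. Before the final detections are reported, these are pruned with per-class non-maximum suppression (NMS). Below is a minimal pure-Python sketch of that post-processing step for the six floor-plan classes. The `Detection` type, the class-name strings, and the thresholds (IoU 0.5, score 0.05) are illustrative assumptions, not values taken from the paper.

```python
from dataclasses import dataclass

# Object classes named in the article; the string labels are illustrative.
CLASSES = ("door", "sliding_door", "stove", "bathtub", "sink", "toilet")


@dataclass
class Detection:
    box: tuple  # (x1, y1, x2, y2) in pixels
    score: float
    label: str


def box_iou(a, b) -> float:
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def class_wise_nms(dets, iou_thresh=0.5, score_thresh=0.05):
    """Greedy per-class NMS: keep the highest-scoring box, drop same-class boxes
    overlapping it by at least iou_thresh, repeat. Labels outside CLASSES are ignored."""
    kept = []
    for label in CLASSES:
        cands = sorted(
            (d for d in dets if d.label == label and d.score >= score_thresh),
            key=lambda d: d.score,
            reverse=True,
        )
        while cands:
            best = cands.pop(0)
            kept.append(best)
            cands = [d for d in cands if box_iou(d.box, best.box) < iou_thresh]
    return kept
```

Suppression happens only within a class. A toilet box drawn right next to, or even on top of, a door box is therefore never discarded because of the door. Two heavily overlapping door boxes, by contrast, collapse to the higher-scoring one.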

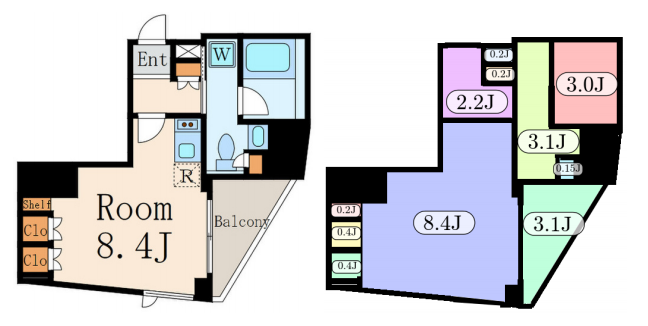

3. Optical character recognition (OCR)

OCR is used to read the room sizes [5]. Not every room necessarily has a size label. In such cases, the sizes of labeled rooms can be propagated to unlabeled ones, because the relative sizes of all rooms are known from the floor plan geometry.
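Propagating room sizes essentially means estimating one scale factor. The following is a minimal sketch. It assumes the segmentation gives a pixel area for every room and that OCR returns areas in a single unit (square meters here). The real-world area per pixel is estimated from the labeled rooms and then applied to the unlabeled ones. The function name and the ratio-of-sums estimator are assumptions for illustration, not the paper's exact procedure.

```python
def propagate_room_areas(pixel_areas: dict, labeled_areas: dict) -> dict:
    """Estimate every room's real area from its pixel area.

    pixel_areas:   room id -> area in pixels (e.g. from the segmented room regions)
    labeled_areas: room id -> area read by OCR (a single unit, e.g. square meters)
    """
    known = [room for room in labeled_areas if room in pixel_areas]
    if not known:
        raise ValueError("need at least one room with an OCR size label")
    # Real-world area per pixel, pooled over all labeled rooms (ratio of sums).
    scale = sum(labeled_areas[r] for r in known) / sum(pixel_areas[r] for r in known)
    # Keep OCR values where present; scale pixel areas for the remaining rooms.
    return {
        room: labeled_areas[room] if room in labeled_areas else px * scale
        for room, px in pixel_areas.items()
    }
```

Suppose a 40,000-pixel living room is labeled 20 m². A 20,000-pixel bedroom without a label is then estimated at about 10 m². Pooling all labeled rooms, rather than trusting a single one, lets every OCR reading contribute to the scale.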

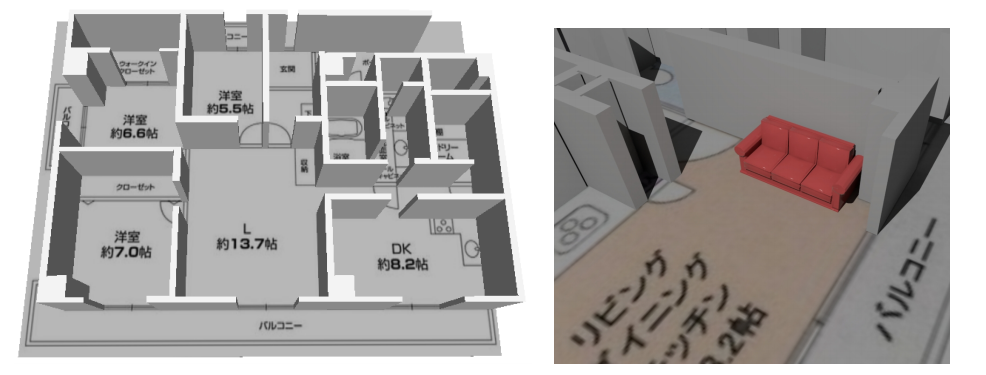

There are many advantages to converting floor plans into this model representation. For one, it makes it easy to create 3D models for virtual walkthroughs or interactive home remodeling. In addition, reading the room sizes from the image allows true-to-scale visualization of furniture in the property.
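Once an area scale is known, true-to-scale rendering comes down to converting between pixels and meters. The sketch below assumes a scale given in square meters per pixel and a default ceiling height of 2.4 m, both chosen for illustration. It converts a furniture footprint into pixels and extrudes an axis-aligned wall rectangle into the eight corners of a 3D box. The function names are hypothetical.

```python
import math


def meters_per_pixel(area_per_px2: float) -> float:
    """Convert an area scale (square meters per pixel) into a length scale."""
    return math.sqrt(area_per_px2)


def furniture_footprint_px(width_m: float, depth_m: float, area_per_px2: float):
    """Size in pixels of a furniture item, for drawing it true to scale on the plan."""
    mpp = meters_per_pixel(area_per_px2)
    return width_m / mpp, depth_m / mpp


def extrude_wall(rect_px, area_per_px2: float, height_m: float = 2.4):
    """Turn an axis-aligned wall rectangle (x1, y1, x2, y2) in pixels into the
    eight corners of a 3D box in meters; the 2.4 m height is an assumed default."""
    x1, y1, x2, y2 = rect_px
    mpp = meters_per_pixel(area_per_px2)
    footprint = [(x1, y1), (x2, y1), (x2, y2), (x1, y2)]
    return [(x * mpp, y * mpp, z) for z in (0.0, height_m) for x, y in footprint]
```

At 0.0004 m² per pixel (0.02 m per pixel), a 2 m by 0.9 m sofa would be drawn at roughly 100 by 45 pixels.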

In summary, RIT has developed a method for converting a floor plan image into a parametric model. This model can be used directly in applications for viewing, planning, and remodeling property.

References:

[1] S. Dodge, J. Xu, B. Stenger. Parsing floor plan images. In IAPR Conference on Machine Vision Applications (MVA), May 2017.

[2] E. Shelhamer, J. Long, T. Darrell. Fully convolutional networks for semantic segmentation. In Transactions on Pattern Analysis and Machine Intelligence (TPAMI), Vol. 39(4), pages 640-651, April 2017.

[3] R-FP-500 dataset at https://rit.rakuten.com/data_release/

[4] S. Ren, K. He, R. Girshick, J. Sun. Faster R-CNN: Towards real-time object detection with region proposal networks. In Transactions on Pattern Analysis and Machine Intelligence (TPAMI), Vol. 39(6), pages 1137-1149, June 2017.

[5] Google Cloud Vision API for OCR https://cloud.google.com/vision/docs/ocr