NEWS

Overcoming Language Barriers with Translation at ACL

Raymond Susanto, Shamil Chollampatt, and Liling Tan of RIT Singapore had their paper accepted at the 58th Annual Meeting of the Association for Computational Linguistics (ACL). Regarded as the premier conference in computational linguistics, ACL 2020 was held from July 5 to 10, with participants from around the globe and keynote speakers from Columbia University and MIT.

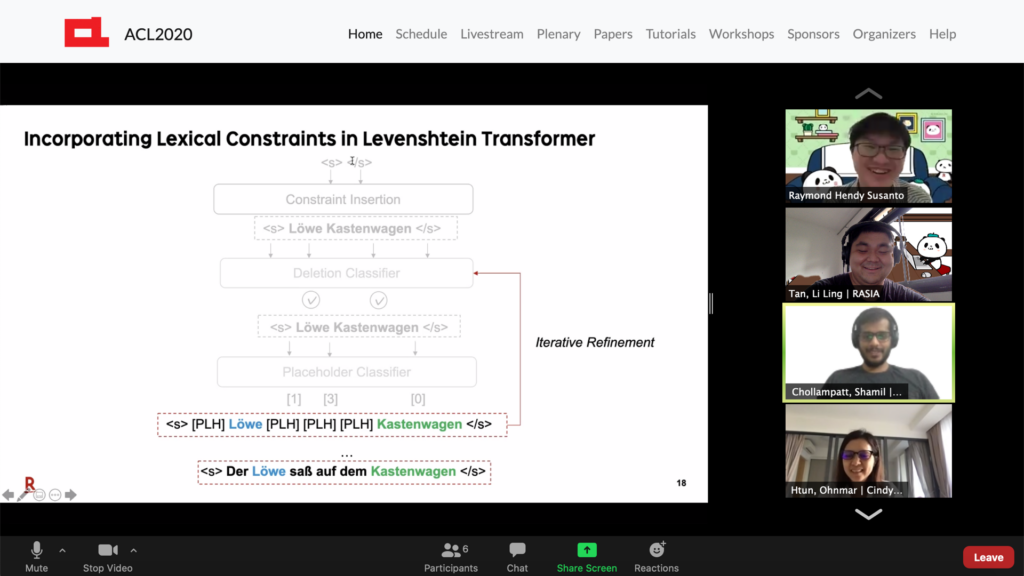

The collaborative RIT paper, “Lexically Constrained Neural Machine Translation with Levenshtein Transformer”, investigates methods to improve lexically constrained, or dictionary-augmented, neural machine translation (NMT). The authors note that although NMT systems can generate high-quality translations, they often translate specific terms inadequately or inconsistently. To address this problem, they propose a simple and effective algorithm for incorporating lexical constraints into the Levenshtein Transformer (LevT), an edit-based model that generates target words in parallel and then refines its output by deleting words or inserting new ones. Through English-German translation experiments, they demonstrate that this approach guarantees 100% dictionary term usage without harming translation quality or speed. This is an important advance, as previous approaches have either failed to guarantee term usage or have sacrificed translation speed.
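For readers curious how a dictionary term can be guaranteed to survive an edit-based decoder, the toy Python sketch below illustrates the general idea under stated assumptions: the constraint words seed the initial target sequence and are flagged so that the deletion step can never remove them. The names `Token`, `delete_step`, `insert_step`, `constrained_decode`, and `toy_propose` are hypothetical, and the hand-written policies stand in for the model's learned classifiers. This is not the authors' implementation, and this toy does not stop insertions inside a multi-word term.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Token:
    text: str
    is_constraint: bool = False  # True for words copied from the user dictionary


def delete_step(seq: List[Token], wants_delete: Callable[[Token], bool]) -> List[Token]:
    # Drop tokens the (hypothetical) deletion policy rejects, but never constraint tokens.
    return [tok for tok in seq if tok.is_constraint or not wants_delete(tok)]


def insert_step(seq: List[Token], propose: Callable[[List[Token], int], List[str]]) -> List[Token]:
    # For every slot i (before seq[i], or at the end when i == len(seq)),
    # insert whatever words the (hypothetical) insertion policy proposes.
    out: List[Token] = []
    for i in range(len(seq) + 1):
        out.extend(Token(word) for word in propose(seq, i))
        if i < len(seq):
            out.append(seq[i])
    return out


def constrained_decode(
    constraints: List[str],
    wants_delete: Callable[[Token], bool],
    propose: Callable[[List[Token], int], List[str]],
    max_iters: int = 3,
) -> List[str]:
    # Assumption: start from the dictionary terms instead of an empty target.
    seq = [Token(word, is_constraint=True) for term in constraints for word in term.split()]
    for _ in range(max_iters):
        seq = delete_step(seq, wants_delete)  # refine: remove unwanted words
        seq = insert_step(seq, propose)       # refine: add new words
    return [tok.text for tok in seq]


def toy_propose(seq: List[Token], i: int) -> List[str]:
    # Hand-written stand-in for a learned insertion model ("I signed the contract").
    if i == 0 and seq and seq[0].text == "Vertrag":
        return ["Ich", "habe", "den"]
    if i == len(seq) and seq and seq[-1].text == "Vertrag":
        return ["unterschrieben"]
    return []


# Even a policy that tries to delete every word cannot remove the constraint.
print(" ".join(constrained_decode(["Vertrag"], lambda tok: True, toy_propose, max_iters=2)))
# prints "Ich habe den Vertrag unterschrieben"
```

Because the protection lives in the deletion step rather than in the scoring, the dictionary term appears in the output regardless of what the policies propose, which mirrors the "100% term usage" property described above.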

The paper is part of the ongoing Machine Translation (Dictionary Feature) project in RIT's language program. The project enables domain-tailored, customized machine translation that takes user-defined dictionaries into account at decoding time, ensuring that pre-specified translation terms appear in the system output. The broader goal is to empower people and businesses to translate content and reach users globally.

ACL was held virtually this year. Presentations were pre-recorded and available online for participants to watch at their convenience. At an in-person conference, presenters would typically hold a short Q&A or a poster session to present their work and interact with visitors. Due to the virtual format, the authors instead took part in two one-hour live Q&A sessions, where they received interesting and constructive feedback to help guide their future research, including on the word reordering of lexical constraints. The conference was also an opportunity to network with researchers from academia and industry working in computational linguistics.

RIT would like to thank ACL as well as all sponsors and presenters at this year’s conference.