NEWS

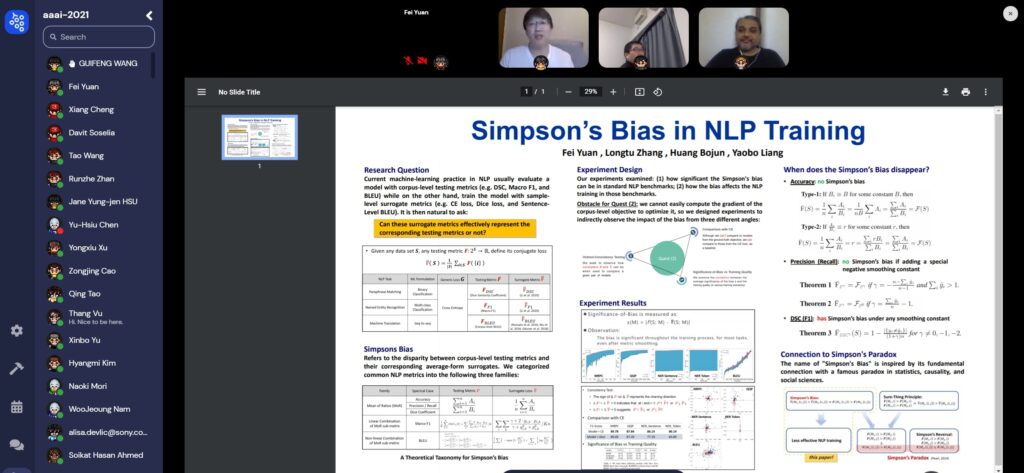

Coining Simpson’s Bias and Finding Best Approaches through Data at AAAI 2021

RIT scientists, Bojun Huang and Longtu Zhang, were invited to present their research findings at this year’s Association for the Advancement of Artificial Intelligence (AAAI)conference.

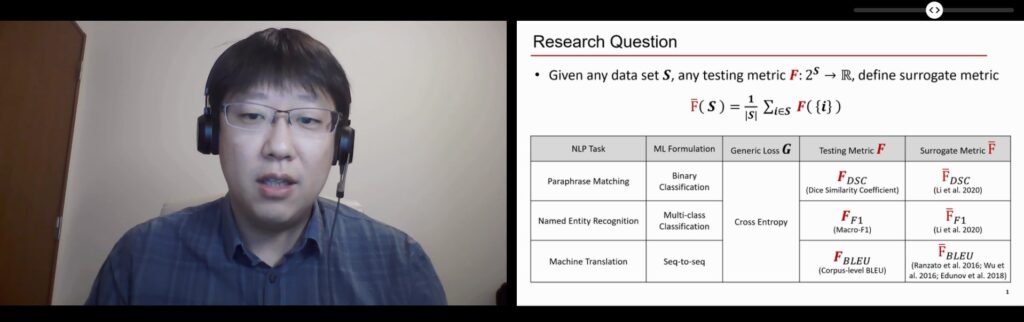

Their paper, Simpson’s Bias in NLP Training, written co-jointly with Fei Yuan and Yaobo Liang, provides an analytical investigation of the disparity between sample level loss in NLP training and population level metrics. They call the disparity, Simpson’s bias, inspired by Simpson’s reversal of inequalities applied in statistics, biology, astronomy, and computational sciences. (Simpson’s reversal is a trend or result when an ordinal information appears in several groups of data but disappears or reverses when combined; the term was made famous in 1973 as part of a gender discrimination lawsuit at University of California, Berkeley.) To derive the right insights and interpretations via data, their research reveals how the bias manifests itself with rather diverse effects in machine learning and between what is optimized and what should be optimized during training. In locating mathematical data where unbiased metrics can be established, the authors call for further studies for better unbiased training in NLP, machine learning, and all deep learning model trainings.

AAAI promotes research in AI and scientific exchange between research, scientists, and students in AI and related fields. The conference was held virtually from February 2-9, 2021. Dr. Huang and Dr. Zhang presented their findings from remote locations.